We missed the BPO wave. We are still missing the cloud wave. Can we ride the Big Data wave, the next big opportunity in the IT industry?

According to market research, the Big Data market will reach $46.34 billion by 2018.

Big players like India are already in the market, using their existing outsourcing channels to take a stake in this industry from their allies. Infosys has created a Big Data platform called BigDataEdge; similarly, Wipro has developed a Big Data framework called the ‘3B framework’. HCL, Happiest Minds and TATA are all grooming their skills to ride this wave.

What about Bangladesh?

We still don’t have a single IT training center in Bangladesh that can deliver courses in a professional manner. Not a single university in the country offers any Big Data courses. While the opportunity is massive, the shortage of skilled people is a very big challenge.

On the other hand, we have a strong pool of young, educated computer professionals who can be trained in this topic. And the telcos, which really do deal with huge amounts of data, should start sending their IT personnel for training on it.

Huge opportunity for Skilled Labor export

According to McKinsey, the U.S. alone faces a shortage of 140,000 to 190,000 analysts and 1.5 million managers who can analyze Big Data. To seize this opportunity, the country's local universities should start offering Big Data courses. They can also partner with universities elsewhere in the world that already offer such courses, to get expert help.

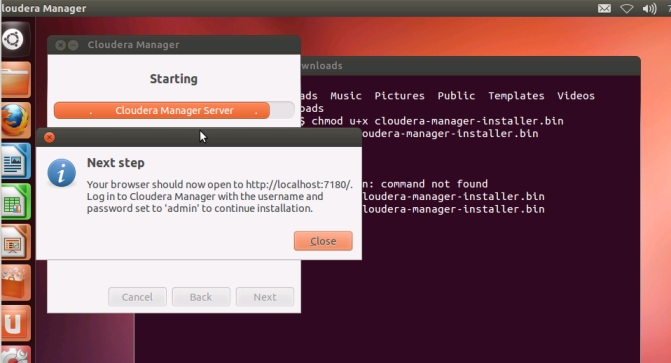

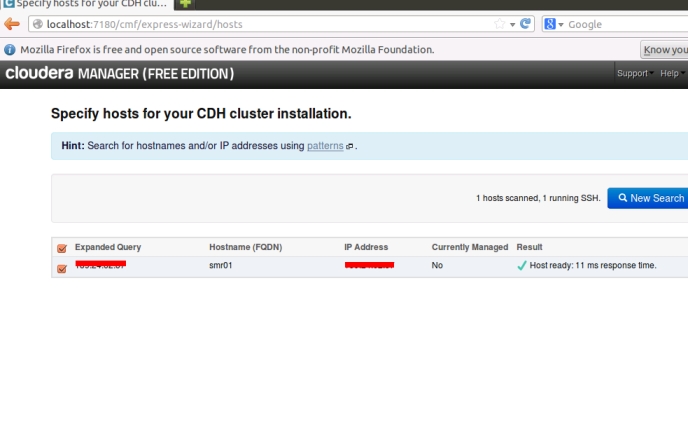

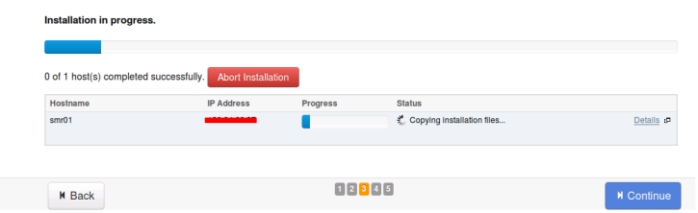

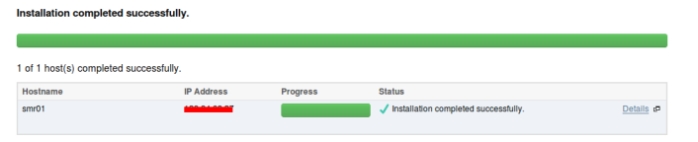

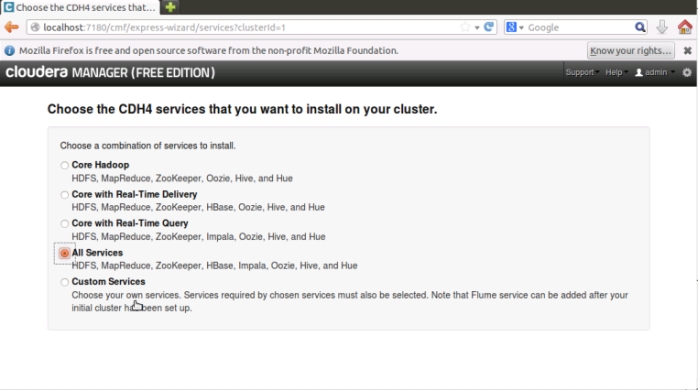

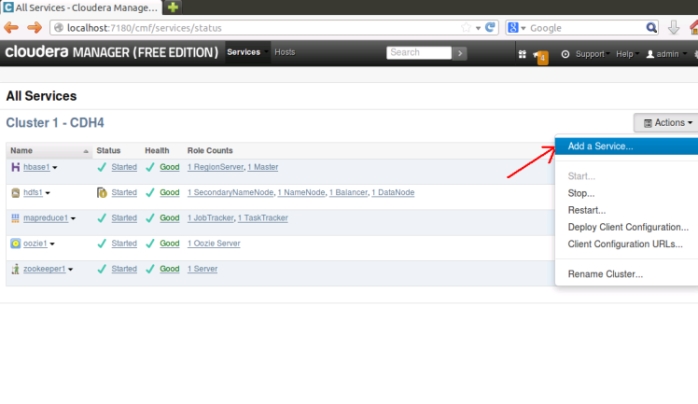

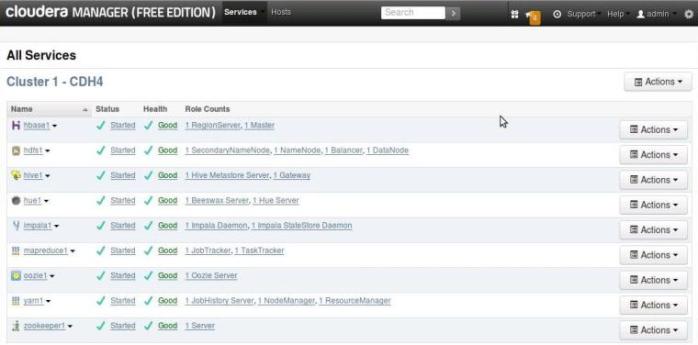

Local IT training providers like AMS and New Horizon should offer Big Data training to attract IT professionals who are already experienced and can move into this new profession after professional training. Training providers should look for joint ventures with EMC, IBM, Cloudera and Hortonworks to make their Big Data courses available in Bangladesh.

Huge opportunity for IT Service Industry

Local IT service companies like Brac IT Service Ltd., Computer Services Ltd. and many more are still struggling in the IT service industry. These companies have huge financial backing from their big brothers, such as the Brac NGO or the PC sales division of Computer Services, yet it is surprising to see them still struggling to build a sustainable business portfolio in IT services.

I would request you all to explore the outside world and strike joint business deals with market leaders in the Big Data industry, like Teradata, IBM, EMC, Cloudera and Hortonworks. We don’t have the time or justification to reinvent the wheel; it is time to work shoulder to shoulder with the big brothers, to learn from them and to get a stake from them. Wipro, the IT giant, did the same in 1988 by creating a joint venture with General Electric of the United States.

Hoping for a shining Bangladesh!