Because of HDFS permission restrictions, you may see an access denied error when copying a file from your local file system to HDFS. Follow the steps below to copy a file from the local system to the Hadoop file system.

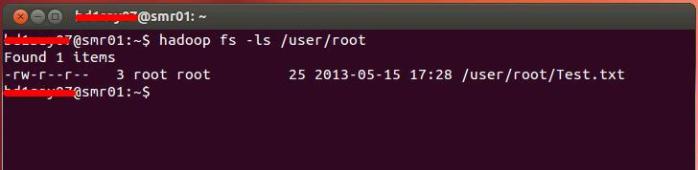

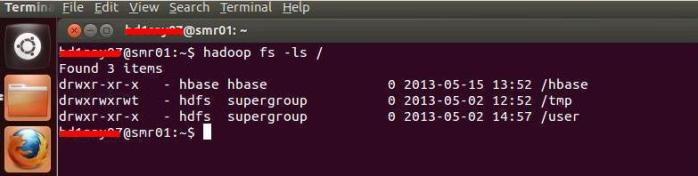

First, check the current directory status:

1. Open a terminal.

2. Enter the following command:

`hadoop fs -ls /`

To create a directory under the `/user` folder, enter the following command (here `root` is the directory name):

`sudo -u hdfs hadoop fs -mkdir /user/root`

After creating the directory, change its ownership to the root user so that root can copy data into it on the Hadoop file system:

`sudo -u hdfs hadoop fs -chown root:root /user/root`

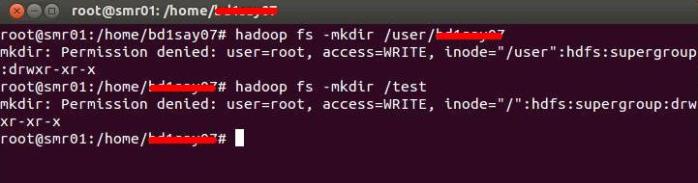
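The two `sudo -u hdfs` commands above can be wrapped in a small shell function so the same setup can be repeated for another user. This is only a sketch: the function names `run` and `create_hdfs_home` and the `DRY_RUN` variable are hypothetical, and it assumes, as in the steps above, that `hdfs` is the HDFS superuser and that you are allowed to `sudo` as that user.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create /user/<name> as the hdfs superuser, then hand it to <name>.
create_hdfs_home() {
  hdfs_user="$1"
  hdfs_dir="/user/${hdfs_user}"
  # Run as "hdfs" because an ordinary user cannot create directories under /user
  run sudo -u hdfs hadoop fs -mkdir "$hdfs_dir" || return 1
  # Owner and group are both set to the user name, mirroring root:root above
  run sudo -u hdfs hadoop fs -chown "${hdfs_user}:${hdfs_user}" "$hdfs_dir"
}
```

Running `DRY_RUN=1 create_hdfs_home root` prints the two commands from this section without contacting the cluster; drop `DRY_RUN=1` to execute them.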

If you run the plain `hadoop fs -mkdir` command without `sudo -u hdfs`, you may see the following permission denied error:

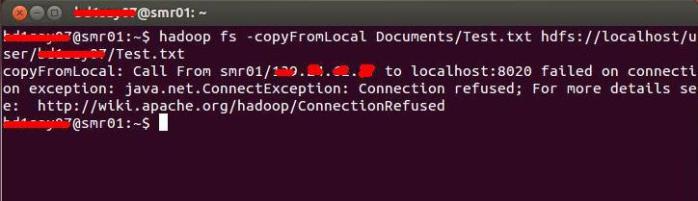

To copy the file, enter the following command (assuming you have a file named Test.txt in your Documents folder and want to copy it to the HDFS `/user/root` folder):

`hadoop fs -copyFromLocal Documents/Test.txt hdfs://localhost/user/root/Test.txt`

At this point you may see a connection refused error if you are using Cloudera's Hadoop distribution (CDH4).

In this case, use the host name instead of localhost, as below (here smr01 is my host name):

`hadoop fs -copyFromLocal Documents/Test.txt hdfs://smr01/user/root/Test.txt`

Your file has now been copied to HDFS.
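The copy step with the host-name workaround can be sketched as a shell function that builds the `hdfs://` URI from the machine's host name and then lists the target directory to confirm the file arrived. The names `run`, `copy_to_hdfs` and `DRY_RUN` are hypothetical. The sketch assumes the NameNode runs on the local machine and, like the commands above, leaves the port out of the URI so the default NameNode port applies.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: copy a local file into /user/<name> on HDFS, addressing
# the NameNode by host name instead of localhost, then list the directory.
copy_to_hdfs() {
  src="$1"          # local file, e.g. Documents/Test.txt
  hdfs_user="$2"    # HDFS home directory name, e.g. root
  # Optional third argument overrides the host; otherwise use `hostname`
  nn_host="${3:-$(hostname)}"
  dest="hdfs://${nn_host}/user/${hdfs_user}/$(basename "$src")"
  run hadoop fs -copyFromLocal "$src" "$dest" || return 1
  # Listing the directory confirms that the copied file is present
  run hadoop fs -ls "/user/${hdfs_user}"
}
```

For example, `DRY_RUN=1 copy_to_hdfs Documents/Test.txt root smr01` prints the smr01 copy command used in this section followed by `hadoop fs -ls /user/root`.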